Artificial intelligence, particularly deep learning, is revolutionizing manufacturing quality control. Automated visual inspection (AVI) systems powered by AI promise higher accuracy, faster throughput, and greater consistency than manual inspection. However, realizing this promise hinges on one critical component: training data. Specifically, these systems need large volumes of high-quality, accurately labeled images that represent every scenario they will encounter, especially defects.

This data requirement often becomes a major bottleneck. Collecting enough real-world images, particularly of rare but critical defects, can be prohibitively expensive, time-consuming, and sometimes simply impossible. Imagine trying to capture thousands of examples of a specific, infrequent manufacturing flaw – you might need to run production for weeks or months, potentially generating excessive scrap, just to gather enough data.

This is where synthetic data emerges as a game-changing solution. By digitally creating artificial yet highly realistic images, we can overcome the limitations of real-world data acquisition, accelerate the development of AI models for visual inspection, and improve their performance.

The Real-World Data Dilemma in Manufacturing

Building a robust AI visual inspection model requires a dataset that is:

- Large: Deep learning models are data-hungry. They need thousands, sometimes millions, of examples to learn effectively.

- Diverse: The data must cover all expected variations in the product’s appearance – different lighting conditions, camera angles, part positioning, material finishes, and crucially, all types and severities of potential defects.

- Balanced: While normal (non-defective) parts are usually abundant, defect images are often scarce. An imbalanced dataset can lead to models that are excellent at identifying good parts but terrible at catching flaws.

- Accurately Annotated: Every image needs precise labels (bounding boxes, segmentation masks, classifications) indicating the location and type of any defects. Manual annotation is labor-intensive, expensive, and prone to human error and inconsistency.
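
To put a number on the balance requirement: suppose a dataset has g good images and d real defect images, and synthetic defect images will be added to rebalance it. The number s of synthetic defect images needed to reach a target defect fraction t follows from (d + s) / (g + d + s) = t, which gives s = (t(g + d) - d) / (1 - t). The helper below is a hypothetical illustration of that arithmetic only; its name and the image counts in the usage note are made up for the example.

```python
import math


def synthetic_defects_needed(good: int, defect: int, target_fraction: float) -> int:
    """Hypothetical helper: smallest number s of synthetic defect images such that
    (defect + s) / (good + defect + s) reaches target_fraction."""
    if not 0.0 < target_fraction < 1.0:
        raise ValueError("target_fraction must be strictly between 0 and 1")
    # Solve (d + s) / (g + d + s) = t for s.
    s = (target_fraction * (good + defect) - defect) / (1.0 - target_fraction)
    # Round up to whole images; never return a negative count (already balanced).
    return max(0, math.ceil(s))
```

For example, with 9,900 good images and 100 real defect images, `synthetic_defects_needed(9900, 100, 0.5)` returns 9,800, meaning a 50/50 split would require roughly a hundred times more defect images than were actually captured. The 50% target is only an illustration; a suitable ratio depends on the model and training setup.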

Meeting these requirements with real-world data collection faces significant hurdles:

- Cost & Time: Setting up capture stations, running production lines, and manually collecting and sorting images are expensive and slow. Capturing rare defects exacerbates this.

- Scarcity of Defects: Many manufacturing processes are highly optimized, meaning critical defects occur infrequently. Waiting to capture enough natural occurrences can stall AI development indefinitely.

- Production Disruption: Collecting data might require interrupting or altering normal production processes.

- Annotation Effort: Manually labeling thousands of images, especially with pixel-perfect segmentation masks for complex defects, requires significant expert time and cost.

- Inability to Capture Edge Cases: Some potential failure modes or environmental conditions might be too dangerous, expensive, or difficult to replicate physically for data capture.

Enter Synthetic Data: The Digital Twin for Training Data

Synthetic data, in this context, refers to artificially generated imagery created using computer graphics techniques (like those used in gaming and CGI) to mimic real-world inspection scenarios. Instead of physically capturing images, we simulate the product, its environment, the defects, and the image acquisition process.

How is it Generated for Visual Inspection?

The process typically involves several steps:

- 3D Modeling: Start with a high-fidelity 3D model of the product, often derived directly from CAD designs. This ensures geometric accuracy.

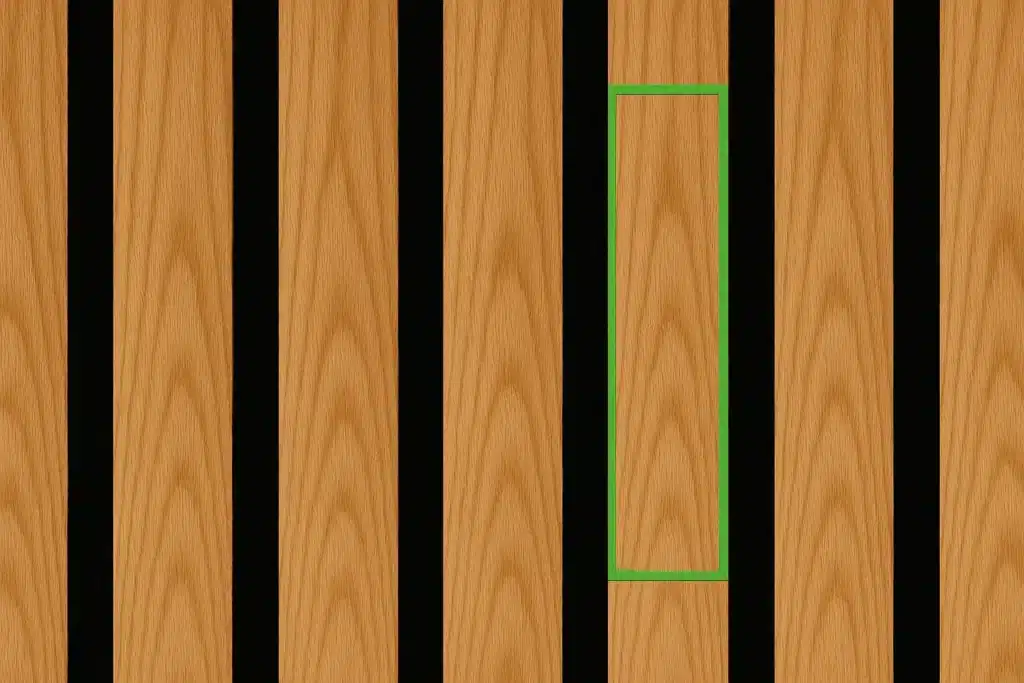

- Material & Texture Application: Apply realistic digital materials and textures to the 3D model to replicate its surface appearance (e.g., brushed metal, injection-molded plastic, painted surfaces).

- Defect Modeling: This is crucial. Defects (scratches, dents, cracks, contamination, misprints, assembly errors, etc.) are modeled digitally. This can range from applying procedural textures that mimic scratches to geometrically altering the 3D model to create dents or warps. Importantly, these defects can be parametrically controlled: their size, shape, location, and severity can be varied systematically.

- Virtual Scene Setup: Create a virtual environment that replicates the real-world inspection station. This includes modeling the camera (specifying lens type, resolution, sensor noise characteristics), lighting (type, position, intensity, color temperature), and part handling/positioning mechanisms.

- Rendering & Domain Randomization: Generate images by rendering the 3D scene from the virtual camera’s perspective. The key here is domain randomization: systematically varying parameters during rendering to create diverse data. This includes:

  - Lighting: Changing intensity, color, position, and number of lights.

  - Pose: Altering the product’s position and orientation relative to the camera.

  - Camera: Varying camera angle and distance, and even simulating lens distortions or sensor noise.

  - Background: Changing background elements or textures.

  - Defects: Randomizing defect type, location, size, and severity.

- Automated Annotation: Because the entire scene is digitally controlled, perfect ground-truth annotations (bounding boxes, segmentation masks, classifications) can be generated automatically and instantly during the rendering process. No manual labeling required!
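
The generation loop above can be illustrated with a deliberately simplified sketch. Instead of a 3D renderer, the hypothetical `render_sample` function below draws a flat grey part on a 2D background with NumPy. For every image it randomizes lighting, background, sensor noise, apparent part size, and position, optionally adds a parametric scratch, and then reads the segmentation mask and bounding box directly from the scene it just drew. The parameter ranges and the (x_min, y_min, x_max, y_max) box format are arbitrary assumptions for illustration, not values from a real inspection station.

```python
import numpy as np


def render_sample(rng: np.random.Generator, size: int = 128, defect_prob: float = 0.5):
    """Hypothetical toy stand-in for a renderer: a flat part on a background,
    optionally with a scratch. Returns (image, defect_mask, bbox_or_None)."""
    # --- Domain randomization: draw fresh scene parameters for every image ---
    light = rng.uniform(0.5, 1.0)          # lighting intensity on the part
    background = rng.uniform(0.0, 0.3)     # background grey level
    noise_sigma = rng.uniform(0.0, 0.05)   # simulated sensor noise
    half = int(rng.integers(20, 40))       # apparent part size (camera distance)
    cx, cy = (int(v) for v in rng.integers(half, size - half, size=2))  # part position

    part_mask = np.zeros((size, size), dtype=bool)
    part_mask[cy - half:cy + half, cx - half:cx + half] = True
    img = np.where(part_mask, light, background)

    # --- Parametric defect: straight scratch with random start, length, angle, width ---
    mask = np.zeros((size, size), dtype=bool)
    if rng.random() < defect_prob:
        x0 = rng.uniform(cx - half / 2, cx + half / 2)
        y0 = rng.uniform(cy - half / 2, cy + half / 2)
        length = rng.uniform(5.0, half)
        angle = rng.uniform(0.0, np.pi)
        r = int(rng.integers(0, 2))        # brush radius: 0 -> 1 px wide, 1 -> 3 px wide
        for t in np.linspace(0.0, length, 2 * int(length) + 1):
            x = int(round(x0 + t * np.cos(angle)))
            y = int(round(y0 + t * np.sin(angle)))
            # Only paint points that lie on the part surface.
            if not (cx - half <= x < cx + half and cy - half <= y < cy + half):
                continue
            mask[max(y - r, 0):y + r + 1, max(x - r, 0):x + r + 1] = True
        mask &= part_mask                  # trim brush overspill at the part edges
        img[mask] *= rng.uniform(0.3, 0.7)  # scratches render darker than the surface

    # Sensor noise, then clamp to the valid intensity range [0, 1].
    img = np.clip(img + rng.normal(0.0, noise_sigma, img.shape), 0.0, 1.0)

    # --- Automatic annotation: labels come from the scene state, not from a human ---
    bbox = None
    if mask.any():
        ys, xs = np.nonzero(mask)
        bbox = (int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max()))
    return img, mask, bbox
```

Calling it in a loop, for example `rng = np.random.default_rng(0)` followed by `[render_sample(rng) for _ in range(1000)]`, yields images that already carry their masks and boxes, with no annotation step. A real pipeline would replace the drawing code with physically based 3D rendering, but the pattern of sampling parameters, rendering, and exporting labels from the scene state is the same idea.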

The Unfair Advantages of Synthetic Data

Leveraging synthetic data offers compelling benefits that directly address the challenges of real-world data collection:

- Overcoming Scarcity: Generate virtually unlimited examples of any defect type, no matter how rare it is in reality. Need 10,000 images of a specific pinhole defect? Simulate it.

- Perfect Annotations, Instantly: Eliminate the costly, time-consuming, and error-prone manual annotation process. Synthetic data comes with pixel-perfect labels generated automatically.

- Total Control & Diversity: Systematically generate images covering all conceivable variations in lighting, pose, material, and defect characteristics. This ensures the AI model is exposed to a much wider range of scenarios than might be feasible to capture physically.

- Targeting Edge Cases: Create data for specific, hard-to-replicate scenarios or critical failure modes that are difficult or dangerous to produce in the real world.

- Cost-Effectiveness: While initial setup requires expertise in 3D modeling and rendering, the marginal cost of generating additional synthetic images is extremely low compared to collecting physical samples.

- Speed & Agility: Generate large datasets in hours or days, not weeks or months. This drastically accelerates AI model training, iteration, and deployment cycles.

- Enhanced Robustness: Training on diverse synthetic data, especially using domain randomization, helps models become less sensitive to minor variations in the production environment, leading to more robust performance.

Bridging the “Reality Gap”: Challenges and Best Practices

Synthetic data isn’t a magic bullet. The primary challenge is the “reality gap” – the difference between simulated images and real-world images. If the synthetic data isn’t realistic enough, the AI model might learn artifacts specific to the simulation and fail to generalize well to real inspection images.

Mitigating the reality gap requires:

- High-Fidelity Simulation: Investing in accurate 3D models, realistic material definitions (e.g., using Bidirectional Reflectance Distribution Functions, or BRDFs), and physically plausible lighting simulations.

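- Effective Domain Randomization: Intelligently varying parameters within plausible bounds helps the model focus on relevant features (defects) rather than simulation-specific details. The goal is for the simulated variation to be broader and more challenging than the variation seen on the real production line, so that real images look to the model like just another variation it has already learned to handle.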

- Hybrid Approaches: The most effective strategy often involves combining synthetic and real data:

  - Pre-training: Train the model initially on a large synthetic dataset to learn general features and defect characteristics.

  - Fine-tuning: Fine-tune the pre-trained model on a smaller, targeted set of real-world images. This helps the model adapt to the nuances of the specific production environment.

  - Augmentation: Mix synthetic images directly into the real dataset to boost the number of defect examples and overall diversity.
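
For the augmentation variant of the hybrid approach, one simple way to control the mix is to fix the share of synthetic images in the final training set. The hypothetical `mix_datasets` helper below keeps every real sample, adds enough randomly chosen synthetic samples to reach the requested fraction (capped by how many are available), tags each sample with its source, and shuffles the result. It is a sketch under those assumptions, not a prescribed recipe, and the 80% fraction in the usage note is an arbitrary example rather than a recommendation.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def mix_datasets(real: Sequence[T], synthetic: Sequence[T],
                 synthetic_fraction: float, seed: int = 0) -> List[Tuple[str, T]]:
    """Hypothetical helper: all real samples plus enough synthetic samples that
    synthetic items make up roughly `synthetic_fraction` of the result."""
    if not 0.0 <= synthetic_fraction < 1.0:
        raise ValueError("synthetic_fraction must be in [0, 1)")
    rng = random.Random(seed)
    # Solve n_syn / (n_real + n_syn) = f for n_syn.
    wanted = round(synthetic_fraction * len(real) / (1.0 - synthetic_fraction))
    n_syn = min(wanted, len(synthetic))    # cannot use more than we generated
    picked = rng.sample(list(synthetic), n_syn)
    # Tag every sample with its source so real-only metrics stay easy to compute.
    mixed = [("real", x) for x in real] + [("synthetic", x) for x in picked]
    rng.shuffle(mixed)
    return mixed
```

With 200 real and 5,000 synthetic images, `mix_datasets(real, synthetic, 0.8)` returns 1,000 tagged samples, 800 of them synthetic. Keeping the source tag makes it straightforward to also report metrics on real images alone, which is where any remaining reality gap would show up.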

Synthetic Data and EasyODM: A Powerful Combination

Platforms like EasyODM are designed to simplify the deployment of AI for visual inspection. Synthetic data generation complements this mission perfectly. While EasyODM provides the tools to train and deploy models efficiently, synthetic data provides the fuel needed to make those models truly effective, especially when real defect data is scarce.

Imagine feeding EasyODM a dataset enriched with thousands of perfectly labeled synthetic defect examples alongside your available real images. This allows you to:

- Train robust models faster, even with limited real defect samples.

- Improve detection rates for rare but critical flaws.

- Reduce the time and cost associated with data collection and annotation.

- Iterate quickly on model improvements by generating new synthetic data variations as needed.

EasyODM can train on datasets that contain both real and synthetic images, allowing you to harness the power of this combined approach for superior inspection results.

The Future is Synthetically Enhanced

As simulation technology continues to advance, generating ever-more-realistic synthetic data will become easier and more accessible. We expect tighter integration between digital twin initiatives in manufacturing and synthetic data generation pipelines. Techniques like Generative Adversarial Networks (GANs) may also play a larger role in refining synthetic images or generating variations based on real data.

Conclusion

The data bottleneck is a significant hurdle in deploying AI for visual inspection. Synthetic data offers a powerful and increasingly viable solution, enabling the creation of large, diverse, and perfectly annotated datasets that overcome the limitations of real-world data collection. By simulating products, defects, and inspection environments with high fidelity and employing techniques like domain randomization, manufacturers can significantly accelerate AI development, improve model robustness, and ultimately enhance quality control. When combined with user-friendly AI platforms like EasyODM, synthetic data unlocks the full potential of automated visual inspection, paving the way for smarter, faster, and more reliable manufacturing.