The accuracy of machine vision systems is essential for dependable performance in quality control and inspection applications. Key performance metrics include precision, which reflects how few false positives the system produces, and recall, which reflects how many of the actual defects it detects.

A high F1 score indicates that precision and recall are both strong, which supports robust performance across different scenarios. Gauge R&R analysis helps measure and refine accuracy by evaluating repeatability and reproducibility.

High-resolution imaging, regular calibration, and advanced processing techniques greatly enhance detail detection and measurement precision. Ideal lighting and controlled environments also play a vital role in maintaining accuracy.


Evaluating Machine Vision Performance

Evaluating machine vision performance necessitates an extensive analysis of the confusion matrix to understand the distribution of true positives, true negatives, false positives, and false negatives. This analysis is instrumental in determining key performance metrics such as accuracy, precision, recall, and the F1 Score, all of which are vital for quality control in various applications.

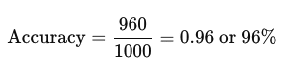

Accuracy is a fundamental metric, representing the ratio of correctly classified objects (true positives and true negatives) to the total number of inspected objects.

For instance, if a machine vision system correctly classifies 960 out of 1000 parts, the accuracy would be 96%. However, accuracy alone might not provide a thorough view of the system’s performance, especially in scenarios with imbalanced datasets.
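The limitation of accuracy on imbalanced data can be illustrated with a minimal sketch. The batch below is hypothetical (1000 parts, 40 of them defective) and the "system" simply passes every part; the function names are illustrative and not taken from any library.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all inspected parts that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)


def recall(tp, fn):
    """Share of actual defects that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical batch: 960 good parts, 40 defective parts.
# A system that passes every part never flags a defect.
tp, fp = 0, 0      # nothing is flagged as defective
tn, fn = 960, 40   # all good parts pass, all defects are missed

print(f"accuracy = {accuracy(tp, tn, fp, fn):.2%}")
print(f"recall   = {recall(tp, fn):.2%}")
```

Under these assumptions the system reaches 96% accuracy while detecting none of the defects, which is why accuracy is usually read together with precision and recall.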

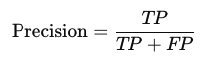

Precision and recall offer deeper insights into the classifier’s performance. Precision measures the accuracy of positive predictions and is calculated as true positives divided by the sum of true positives and false positives. High precision indicates few false positives; when a positive prediction means that a part is flagged as defective, this is essential for minimizing the rejection of good parts.

Written as a formula, with TP the number of true positives and FP the number of false positives:

$$\text{Precision} = \frac{TP}{TP + FP}$$

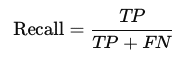

Recall, on the other hand, measures the ability of the classifier to identify all relevant instances and is calculated as true positives divided by the sum of true positives and false negatives. High recall guarantees that most defects are detected, reducing the likelihood of defective parts passing undetected.

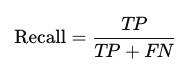

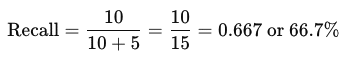

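Recall assesses the classifier’s ability to identify all relevant instances and is calculated as:

$$\text{Recall} = \frac{TP}{TP + FN}$$

where TP is the number of true positives and FN is the number of false negatives.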

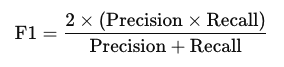

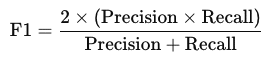

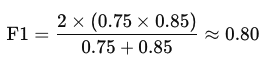

The F1 Score combines precision and recall into a single metric, providing a balanced measure of the system’s effectiveness. It is particularly useful in scenarios where a balance between precision and recall is desired. The F1 Score is calculated as:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

A high F1 Score reflects a robust machine vision system adept at both recognizing true positives and keeping false positives and false negatives low, thereby improving overall quality control.

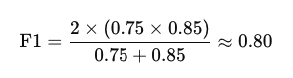

For example, if a classifier has a precision of 0.75 and recall of 0.85, the F1 Score would be approximately:

$$F_1 = 2 \cdot \frac{0.75 \cdot 0.85}{0.75 + 0.85} = \frac{1.275}{1.6} \approx 0.797$$

Understanding Confusion Matrix

To accurately assess the performance of a machine vision system, it is essential to understand the confusion matrix, a tool that visualizes the classifier’s predictions against actual outcomes. The confusion matrix is a fundamental component in evaluating the effectiveness of machine vision systems, providing a detailed breakdown of how well the system classifies objects.

A confusion matrix consists of four main components: True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). These components are vital for deriving various performance metrics that offer insights into the system’s accuracy and precision.

- True Positives (TP): Instances where the classifier correctly identifies the presence of an object or feature.

- True Negatives (TN): Instances where the classifier correctly identifies the absence of an object or feature.

- False Positives (FP): Instances where the classifier incorrectly identifies the presence of an object or feature.

- False Negatives (FN): Instances where the classifier fails to identify the presence of an object or feature.

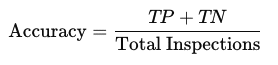

The accuracy of a machine vision system can be calculated using the formula:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

This provides a straightforward measure of the overall correctness of the system.
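As a minimal sketch of how the four components might be tallied in practice, the snippet below counts TP, TN, FP, and FN from paired lists of actual and predicted labels. The label strings and the function name `confusion_counts` are assumptions made for illustration.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="defect"):
    """Tally TP, TN, FP, and FN for a binary inspection result."""
    counts = Counter({"TP": 0, "TN": 0, "FP": 0, "FN": 0})
    for a, p in zip(actual, predicted):
        if p == positive:
            # The system flagged the part: correct only if it really is positive.
            counts["TP" if a == positive else "FP"] += 1
        else:
            # The system passed the part: a miss if it was actually positive.
            counts["FN" if a == positive else "TN"] += 1
    return counts


# Hypothetical inspection of six parts.
actual = ["defect", "ok", "ok", "defect", "ok", "ok"]
predicted = ["defect", "ok", "defect", "ok", "ok", "ok"]

counts = confusion_counts(actual, predicted)
accuracy = (counts["TP"] + counts["TN"]) / sum(counts.values())
print(dict(counts), f"accuracy = {accuracy:.2f}")
```

Keeping the raw counts, rather than only the derived accuracy, makes it possible to compute precision, recall, and the F1 Score from the same tally.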

Calculating System Accuracy

Calculating system accuracy in machine vision involves determining the ratio of correct classifications, represented by the sum of true positives and true negatives, to the total number of inspections conducted:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

This metric is essential for understanding the effectiveness of a machine vision system in correctly identifying objects or defects.

System accuracy depends in turn on the accuracy and repeatability of the underlying measurements, which ensure consistent performance across multiple inspections. A high-precision measuring system is necessary to achieve the desired accuracy, often within a range of 0.000050 to 0.0001, depending on the specific application. Accurate calibration is also critical, as it aligns measured values with standard values, thereby improving the repeatability of measurements.

Resolution plays a pivotal role in achieving high accuracy. According to the Nyquist-Shannon sampling theorem, the spatial sampling frequency must be at least twice the highest spatial frequency of the details being detected; in practice, the smallest feature of interest must span at least two pixels. This requirement ensures that the system can capture fine details, thereby reducing errors. For instance, utilizing high-resolution, low-noise imaging components can greatly boost system accuracy by minimizing measurement errors caused by external factors.

Furthermore, the pixel resolution directly impacts the system’s ability to discern small features. Higher pixel resolution improves the system’s ability to detect and classify objects accurately, thereby enhancing overall system performance. Continuous evaluation and adaptation of these components are essential to maintain and improve the accuracy and repeatability of machine vision systems.
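The two-pixels-per-feature reading of the Nyquist-Shannon requirement can be turned into a quick sizing estimate. The sketch below is illustrative only: the function name, the millimetre units, and the example field of view are assumptions, and real systems often budget more than two pixels per feature to leave margin for optics and noise.

```python
import math


def min_pixels(field_of_view_mm, smallest_feature_mm, pixels_per_feature=2):
    """Minimum pixel count along one axis so that the smallest feature
    spans at least `pixels_per_feature` pixels (2 is the Nyquist limit)."""
    return math.ceil(field_of_view_mm / smallest_feature_mm * pixels_per_feature)


# Hypothetical setup: 100 mm field of view, 0.25 mm smallest feature.
print(min_pixels(100, 0.25))      # Nyquist minimum
print(min_pixels(100, 0.25, 4))   # a more conservative budget
```

Running the estimate for each axis of the field of view gives a lower bound on the sensor resolution to look for.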

Importance of Precision

Precision in the measurement sense, meaning the repeatability of results rather than the classification metric defined earlier, is essential for ensuring the consistency and reliability of measurements, thereby improving overall system performance. Overall accuracy improves notably when precision is prioritized, because it minimizes the variability of repeated measurements and keeps results consistent over time. High precision is particularly important in applications such as metrology, where even the slightest deviation can lead to substantial errors.

Achieving sub-pixel precision in machine vision systems allows for the detection of fine structures and minute defects, greatly improving the quality of inspections. Sub-pixel precision involves measuring details that are smaller than the size of a single pixel, which provides more granular and accurate data. This level of precision is fundamental for industries that require stringent quality control and high-resolution imaging.
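One common way to obtain sub-pixel positions, shown here as a minimal sketch rather than as the method any particular system uses, is to fit a parabola through the gradient peak of an intensity profile and take the vertex as the edge location. The function name and the sample profile are hypothetical.

```python
def subpixel_edge_position(profile):
    """Estimate the position of the strongest edge in a 1-D intensity
    profile, in pixel units, using parabolic interpolation of the gradient."""
    # gradient[i] describes the step between pixel i and pixel i + 1,
    # so it is located at position i + 0.5.
    gradient = [abs(b - a) for a, b in zip(profile, profile[1:])]
    k = max(range(len(gradient)), key=gradient.__getitem__)
    if k == 0 or k == len(gradient) - 1:
        return k + 0.5  # peak at the border: no neighbours to interpolate with
    g_left, g_peak, g_right = gradient[k - 1], gradient[k], gradient[k + 1]
    denom = g_left - 2 * g_peak + g_right
    # Vertex of the parabola through the three gradient samples.
    offset = 0.0 if denom == 0 else 0.5 * (g_left - g_right) / denom
    return k + 0.5 + offset


# Hypothetical profile across a dark-to-bright edge.
print(subpixel_edge_position([10, 10, 10, 30, 90, 90, 90]))
```

The returned position falls between pixel centres, which is what allows measurements finer than the pixel grid; the achievable gain depends on image noise and edge contrast.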

Key factors that influence precision in machine vision systems include:

- Calibration: Proper calibration of imaging components, such as cameras and lenses, is critical. Accurate calibration guarantees that the alignment and measurement scales are correct, reducing the risk of substantial errors in measurement outcomes.

- Component Quality: The quality of cameras, lenses, and other imaging components directly impacts the precision of measurements. High-quality components with minimal distortion and high resolution are necessary for achieving accurate results.

- Environmental Stability: Factors such as lighting conditions and temperature fluctuations can affect the precision of machine vision systems. Maintaining a stable environment reduces variability and improves measurement reliability.

Measuring Recall

Recall is an important metric for evaluating a classifier’s ability to identify all relevant instances of a specific class, particularly in quality control applications. In machine vision systems, recall measures the proportion of actual defective items that the system correctly identifies. It is calculated as:

$$\text{Recall} = \frac{TP}{TP + FN}$$

For instance, if a machine vision system identifies 10 out of 15 defective parts, the recall would be:

$$\text{Recall} = \frac{10}{15} \approx 0.67$$

This metric is essential for vision applications where missing a defect can have significant consequences, such as in manufacturing processes. A high recall indicates that the system successfully identifies most defective items, demonstrating its effectiveness in thorough defect detection.

High recall is crucial because it ensures that the majority of defective products are identified and removed from the production line. However, recall must be balanced with precision to maintain an acceptable rate of false positives. Averaging recall over multiple inspection runs gives a more stable indication of the system’s overall performance in detecting defects.
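The balance between recall and precision can be made concrete with a small sketch. It assumes, hypothetically, that the classifier outputs a defect score per part and that parts scoring at or above a threshold are flagged; the scores, labels, and function name are invented for illustration.

```python
def precision_recall_at(scores, labels, threshold):
    """Return (precision, recall) when parts scoring >= threshold are flagged.

    labels: 1 for an actual defect, 0 for a good part.
    """
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    fn = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical defect scores for ten parts, five of which are defective.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 0, 1, 1, 0, 1, 0, 0, 0]

for threshold in (0.75, 0.50, 0.25):
    p, r = precision_recall_at(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  precision={p:.2f}  recall={r:.2f}")
```

With this data, lowering the threshold raises recall until every defect is caught, while more good parts are flagged along the way; the right operating point depends on the relative cost of a missed defect and a false reject.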

F1 Score Explained

The F1 Score serves as a critical metric for evaluating the balance between precision and recall in a machine vision classifier, providing an extensive measure of its performance. This metric is particularly valuable in scenarios involving binary classification, where the classifier must distinguish between two classes, such as ‘defects’ and ‘no defects’. The F1 Score is derived from the harmonic mean of precision and recall, ensuring that neither metric disproportionately influences the final score.

To calculate the F1 Score, use the formula:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

This approach balances the trade-off between precision, the accuracy of positive predictions, and recall, the classifier’s ability to identify all positive instances.

For example, with a Precision of 0.75 and a Recall of 0.85, the F1 Score is:

$$F_1 = 2 \cdot \frac{0.75 \cdot 0.85}{0.75 + 0.85} = \frac{1.275}{1.6} \approx 0.797$$

This indicates the classifier’s overall performance in correctly identifying defective items while minimizing false positives and false negatives.

The F1 Score is particularly useful when dealing with imbalanced datasets, ensuring that the minority class, often defects in manufacturing scenarios, is adequately identified. Key advantages of using the F1 Score include:

- Balanced Performance Measurement: It provides a single metric that considers both precision and recall, offering a more holistic view of classifier performance.

- Imbalanced Data Handling: It is especially effective in scenarios where class distributions are unequal, ensuring that the classifier’s ability to detect the minority class is not overlooked.

- Performance Enhancement: By focusing on both precision and recall, the F1 Score helps in fine-tuning the classifier to achieve peak performance in real-world applications.
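The effect of the harmonic mean can be seen in a short sketch that compares the F1 Score with a plain arithmetic mean. The function name `f1_score` is defined locally here for illustration, and the second pair of values is a hypothetical lopsided classifier.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


pairs = [
    (0.75, 0.85),  # the balanced example from the text
    (1.00, 0.10),  # hypothetical: never wrong when it flags, but misses 90% of defects
]
for precision, recall in pairs:
    arithmetic = (precision + recall) / 2
    print(f"P={precision:.2f} R={recall:.2f}  "
          f"F1={f1_score(precision, recall):.3f}  arithmetic mean={arithmetic:.3f}")
```

For the lopsided pair the arithmetic mean stays above 0.5 while the F1 Score drops below 0.2, which is the behavior that keeps a weak minority-class detector from looking acceptable.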

Gauge R&R Significance

Building on the significance of balanced performance metrics such as the F1 Score, Gauge Repeatability and Reproducibility (Gauge R&R) plays an essential role in guaranteeing the reliability of machine vision systems by evaluating the precision and accuracy of the measurement tools involved. Gauge R&R is fundamental for verifying that variations in measurements stem from the process itself rather than inconsistencies in the measurement systems, thereby underpinning quality control.

A well-conducted Gauge R&R study is imperative; ideally, less than 10% of the total variability should be attributed to measurement error, confirming the robustness of the machine vision system. This study involves multiple operators measuring the same set of parts multiple times to assess two key components: repeatability and reproducibility. Repeatability denotes the variation when the same operator measures the same part repeatedly, while reproducibility refers to the variation between different operators performing the same measurements.
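A crossed Gauge R&R study of this kind is often evaluated with the ANOVA method. The following is a simplified sketch under stated assumptions: every operator measures every part the same number of times (at least two parts, two operators, and two trials), negative variance estimates are clamped to zero, and the 10% criterion is read as the share of study variation (a ratio of standard deviations), which is one common convention. The function name and the data are hypothetical.

```python
from math import sqrt
from statistics import mean


def gauge_rr(data):
    """data[part][operator][trial] -> variance components of a crossed study."""
    p, o, r = len(data), len(data[0]), len(data[0][0])
    grand = mean(x for part in data for cell in part for x in cell)
    part_mean = [mean(x for cell in part for x in cell) for part in data]
    oper_mean = [mean(x for part in data for x in part[j]) for j in range(o)]
    cell_mean = [[mean(cell) for cell in part] for part in data]

    # Mean squares of the two-way ANOVA with interaction.
    ms_part = o * r * sum((m - grand) ** 2 for m in part_mean) / (p - 1)
    ms_oper = p * r * sum((m - grand) ** 2 for m in oper_mean) / (o - 1)
    ms_inter = r * sum(
        (cell_mean[i][j] - part_mean[i] - oper_mean[j] + grand) ** 2
        for i in range(p) for j in range(o)
    ) / ((p - 1) * (o - 1))
    ms_error = sum(
        (x - cell_mean[i][j]) ** 2
        for i in range(p) for j in range(o) for x in data[i][j]
    ) / (p * o * (r - 1))

    # Variance components; negative estimates are clamped to zero.
    repeatability = ms_error
    reproducibility = (max(0.0, (ms_oper - ms_inter) / (p * r))
                       + max(0.0, (ms_inter - ms_error) / r))
    part_variation = max(0.0, (ms_part - ms_inter) / (o * r))
    grr = repeatability + reproducibility
    total = grr + part_variation
    return {
        "repeatability": repeatability,
        "reproducibility": reproducibility,
        "part_variation": part_variation,
        "pct_study_variation": 100 * sqrt(grr / total) if total else 0.0,
    }


# Hypothetical study: 3 parts x 2 operators x 2 trials (values in mm).
study = [
    [[10.01, 9.99], [10.02, 10.00]],
    [[20.00, 20.02], [19.99, 20.01]],
    [[30.01, 29.99], [30.00, 30.02]],
]
result = gauge_rr(study)
print(f"%GRR (study variation) = {result['pct_study_variation']:.2f}%")
```

In this invented data set the parts differ by far more than the measurement scatter, so the measurement system accounts for well under 10% of the study variation. A production study would normally use more parts, operators, and trials than this toy example.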

Gauge R&R results are instrumental in detecting whether the measurement systems can discern changes in the manufacturing process. This capability is critical for maintaining high standards of quality control in environments where machine vision systems are implemented. Continuous monitoring and periodic re-evaluation of Gauge R&R are recommended, especially when modifications occur in the production process or measurement equipment. This practice guarantees ongoing measurement system integrity and sustains the reliability of the machine vision system.

Optimizing Vision Systems

Achieving ideal accuracy in machine vision systems demands a strategic combination of high-resolution imaging, meticulous calibration, and advanced algorithmic processing. A vision system’s ability to detect fine details hinges on the integration of high-resolution, low-noise monochrome cameras, which greatly enhance sensitivity and detail detection. Utilizing a higher resolution ensures that even minute features are captured, allowing for more precise measurements and improved overall accuracy.

Pixel size plays a significant role in this context. Smaller pixel sizes in sensors can capture finer details, but balancing this with sensitivity is essential to avoid noise. Implementing telecentric lenses further enhances accuracy by minimizing perspective distortion, thereby maintaining consistent object sizes across the imaging field, which is essential for precise measurements.

Calibration of the vision system is another important factor. The system must be aligned with measurement standards, confirming that its accuracy is within one-third of the tolerance band. This meticulous calibration process is necessary to maintain reliable outcomes and enhance the vision system’s ability to perform under varying conditions.

Incorporating advanced image processing algorithms, such as sub-pixel processing techniques, can greatly improve measurement precision. These algorithms allow detection beyond the limits of pixel resolution, enabling more accurate image analysis and feature detection. For applications requiring color differentiation, a high-performance color camera can be instrumental, as it provides additional data layers that enhance the system’s analytical capabilities.

Regular evaluation of lighting conditions and ambient control is imperative. Ideal lighting minimizes image washout and enhances contrast, directly impacting the accuracy of the measurements. By focusing on these aspects, machine vision systems can be optimized to achieve superior accuracy and reliability in various applications.

Practical Application Insights

In practical applications, the optimization strategies discussed previously are implemented to achieve the stringent accuracy requirements demanded by high-precision tasks. Machine vision systems are essential in various industrial applications where measurement accuracy is fundamental, particularly in dimensional checks and quality control processes.

High-resolution imaging is a cornerstone of these systems. The use of CCD sensors, known for their superior image quality and sensitivity, plays a significant role in achieving precise measurements. These sensors often feature a higher fill factor, which means that a greater portion of the sensor area is used for light detection, thereby improving sensitivity and signal-to-noise ratio and, in turn, the accuracy of measurements taken from the captured images.

To maintain and improve measurement accuracy, several practical steps are necessary:

- Calibration Procedures: Regular and precise calibration ensures that the machine vision system’s measurements are accurate and consistent with standard values. This is essential for applications requiring repeatability within tight tolerance bands, such as 0.0001.

- Subpixel Processing Techniques: Implementing subpixel algorithms allows for greater precision in detecting fine details, achieving a precision of roughly one-tenth of a pixel (1:10). This technique considerably improves the system’s ability to identify and measure minute features that are critical in high-precision tasks.

- Environmental Control: Maintaining a controlled environment minimizes external factors that could affect the vision system’s performance. This includes managing lighting conditions, temperature, and vibrations, all of which can influence the accuracy of measurements.
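The calibration and subpixel points above can be combined into a rough feasibility check. The sketch below treats effective resolution (object-space pixel size divided by the subpixel factor) as a stand-in for measurement accuracy, which is a simplification, and compares it with the one-third-of-tolerance guideline mentioned earlier. All names, units, and numbers are hypothetical.

```python
def object_pixel_size(field_of_view_mm, sensor_pixels):
    """Size of one pixel projected onto the part, in mm."""
    return field_of_view_mm / sensor_pixels


def meets_tolerance_rule(field_of_view_mm, sensor_pixels, tolerance_band_mm,
                         subpixel_factor=10, fraction=1 / 3):
    """True if the effective resolution is within `fraction` of the tolerance band."""
    effective_mm = object_pixel_size(field_of_view_mm, sensor_pixels) / subpixel_factor
    return effective_mm <= tolerance_band_mm * fraction


# Hypothetical setup: 50 mm field of view, 2000 pixels across, 0.01 mm tolerance band.
print(meets_tolerance_rule(50, 2000, 0.01))                     # with 1:10 subpixel processing
print(meets_tolerance_rule(50, 2000, 0.01, subpixel_factor=1))  # whole-pixel measurement only
```

In this example the setup passes the check only when subpixel processing is applied; an actual qualification would still rely on calibration against measurement standards and a Gauge R&R study rather than on this estimate alone.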

Continuous advancements in machine vision technology, coupled with these practical applications, guarantee that systems can meet the high accuracy and resolution demands of modern industrial processes. By integrating high-quality CCD sensors, employing subpixel processing, and maintaining rigorous calibration and environmental controls, machine vision systems can achieve the necessary measurement accuracy for high-precision applications.

Conclusion

To summarize, the evaluation of machine vision systems is essential for ensuring precise measurements and effective image analysis. Adherence to the Nyquist-Shannon theorem, along with continuous optimization of components, advanced algorithms, and controlled environments, greatly enhances system accuracy.

Key performance metrics—accuracy, precision, recall, and F1 score—provide thorough insights into classifier effectiveness. Through ongoing refinement and practical applications, the capability of machine vision systems to detect fine structures and patterns can be continuously improved.